OpenAI just released Structured Outputs in the API 👀

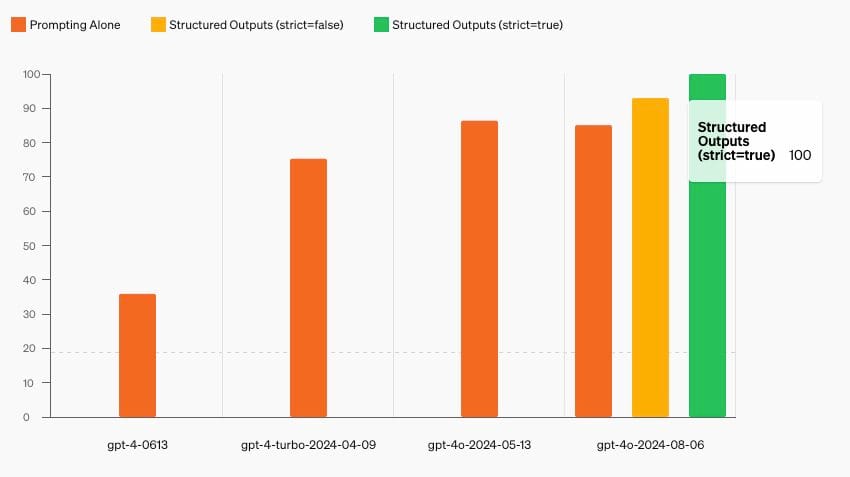

With Structured Outputs enabled in "strict mode", their latest model (gpt-4o-2024-08-06) scores a perfect 100% on their internal evals for following complex JSON schemas. The feature is designed to ensure that generated outputs conform to a developer-supplied JSON Schema.

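Diving deeper, there are caveats: strict mode supports only a subset of JSON Schema (for example, every field must be listed as required, and objects must set `additionalProperties: false`), and some types are not supported. Still, it is a clear improvement over existing approaches to structured output generation. It also looks like the same model without strict mode is above 90% reliable as well.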
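To make the schema requirements concrete, here is a sketch of a strict-mode request body for the Chat Completions API, written as a Python dict. The calendar-event schema and message are only illustrations, and `strict_violations` is a hypothetical helper that checks just two of the documented rules (every property listed in `required`, and `additionalProperties: false` on every object). It is not a full validator of OpenAI's restrictions.

```python
# Chat Completions request body using Structured Outputs in strict mode.
# The "calendar_event" schema and the message text are illustrative only.
request_body = {
    "model": "gpt-4o-2024-08-06",
    "messages": [
        {"role": "user", "content": "Alice and Bob are going to a science fair on Friday."}
    ],
    "response_format": {
        "type": "json_schema",
        "json_schema": {
            "name": "calendar_event",
            "strict": True,
            "schema": {
                "type": "object",
                "properties": {
                    "name": {"type": "string"},
                    "date": {"type": "string"},
                    "participants": {"type": "array", "items": {"type": "string"}},
                },
                # Strict mode: every property must be required...
                "required": ["name", "date", "participants"],
                # ...and extra keys must be disallowed.
                "additionalProperties": False,
            },
        },
    },
}


def strict_violations(schema: dict, path: str = "$") -> list:
    """Hypothetical helper: reports violations of two strict-mode rules
    (all properties required, additionalProperties false), recursively."""
    problems = []
    if schema.get("type") == "object":
        props = schema.get("properties", {})
        missing = set(props) - set(schema.get("required", []))
        if missing:
            problems.append(f"{path}: not required: {sorted(missing)}")
        if schema.get("additionalProperties") is not False:
            problems.append(f"{path}: additionalProperties must be false")
        for key, sub in props.items():
            problems.extend(strict_violations(sub, f"{path}.{key}"))
    elif schema.get("type") == "array" and isinstance(schema.get("items"), dict):
        problems.extend(strict_violations(schema["items"], f"{path}[]"))
    return problems


schema = request_body["response_format"]["json_schema"]["schema"]
print(strict_violations(schema))  # prints []
```

A schema with an optional field or open-ended object would be flagged by this check, which mirrors why some existing schemas need adjusting before they work in strict mode.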

To make this work, they constrain the model's generated tokens based on the provided schema. Specifically, the technique used is Constrained Decoding: the provided JSON Schema is converted into a set of rules (a context-free grammar), and at each step of token generation, any token that would violate those rules is masked out, so the output can only follow the schema. They also generate this ruleset once per schema and cache it for future requests to preserve performance, so only the first request with a new schema pays the extra processing latency.
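A toy sketch of the idea in plain Python, assuming a tiny hypothetical vocabulary and a "schema" written out as a finite set of valid strings. OpenAI compiles JSON Schema into a grammar instead, so this is not their implementation; it only shows the masking step. `compile_rules` precomputes the allowed prefixes once, `functools.lru_cache` stands in for the per-schema cache, and random scores stand in for model logits.

```python
import functools
import math
import random

# Hypothetical toy vocabulary; real tokenizers have on the order of 100k tokens.
VOCAB = ['{"', 'status', '": "', 'ok', 'error', '"}', 'hello', '}', 'oops']

# Toy "schema": {"status": "ok" | "error"}, enumerated as the strings it may
# render as. A real system compiles the JSON Schema into a grammar instead.
VALID_OUTPUTS = frozenset({'{"status": "ok"}', '{"status": "error"}'})


@functools.lru_cache(maxsize=None)
def compile_rules(valid_outputs: frozenset) -> frozenset:
    """Build the rule set once: every prefix of every valid output.
    lru_cache lets later calls with the same schema reuse the result."""
    return frozenset(v[:i] for v in valid_outputs for i in range(len(v) + 1))


def fake_logits(rng: random.Random) -> list:
    """Stand-in for the model: one random score per vocabulary token."""
    return [rng.gauss(0.0, 1.0) for _ in VOCAB]


def constrained_decode(seed: int = 0, max_steps: int = 10) -> str:
    rng = random.Random(seed)
    prefixes = compile_rules(VALID_OUTPUTS)
    out = ""
    for _ in range(max_steps):
        logits = fake_logits(rng)
        # Mask: a token that would leave the set of valid prefixes gets -inf.
        masked = [s if out + t in prefixes else -math.inf for s, t in zip(logits, VOCAB)]
        best = max(masked)
        if best == -math.inf:
            raise RuntimeError(f"dead end after {out!r}")
        # Greedy choice among the tokens that survived the mask.
        out += VOCAB[masked.index(best)]
        if out in VALID_OUTPUTS:
            return out
    raise RuntimeError("max_steps reached before a complete output")


print(constrained_decode(seed=0))  # prints one of the two VALID_OUTPUTS
```

Without the mask, the random scores would happily pick `hello` or `oops`; with it, every seed ends in a schema-conformant string.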

Before this, a common fix was to re-run the LLM with a self-correction step whenever its JSON failed validation. With this implementation, a single inference call (lower cost, faster response time) is enough to get a JSON response that is guaranteed to match your schema.
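For contrast, the older self-correction pattern looks roughly like this sketch: parse and validate the reply, and re-prompt with the error if it fails. `call_llm` and the `flaky_llm` stub are hypothetical stand-ins for a real model call, and the validation here only checks for required keys; each retry is another full inference.

```python
import json
from typing import Callable


def generate_with_self_correction(call_llm: Callable[[str], str], prompt: str,
                                  required_keys: set, max_attempts: int = 3):
    """Sketch of the retry pattern: validate, re-prompt on failure.
    Returns (parsed_object, number_of_llm_calls)."""
    current_prompt = prompt
    for attempt in range(1, max_attempts + 1):
        raw = call_llm(current_prompt)
        try:
            obj = json.loads(raw)  # JSONDecodeError is a subclass of ValueError
            if not isinstance(obj, dict):
                raise ValueError("top-level value is not an object")
            missing = required_keys - set(obj)
            if missing:
                raise ValueError(f"missing keys: {sorted(missing)}")
            return obj, attempt
        except ValueError as err:
            # Feed the error back so the model can correct itself next time.
            current_prompt = (f"{prompt}\n\nYour previous reply was invalid ({err}). "
                              "Reply again with valid JSON only.")
    raise RuntimeError(f"no valid JSON after {max_attempts} attempts")


# Hypothetical stub: the first reply is truncated JSON, the second is valid.
replies = iter(['{"name": "Science fair", "date": "Friday"',
                '{"name": "Science fair", "date": "Friday"}'])


def flaky_llm(prompt: str) -> str:
    return next(replies)


obj, calls = generate_with_self_correction(flaky_llm, "Extract the event.", {"name", "date"})
print(calls)  # prints 2
```

Every failed attempt here costs a full extra call; constrained decoding removes the loop by making invalid output impossible in the first place.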

OpenAI has also added native support for this feature to their Python and Node SDKs, using Pydantic and Zod schemas respectively 😀

If you'd like to learn more about this feature, check out their full write-up here:

https://openai.com/index/introducing-structured-outputs-in-the-api/

For further reading on Constrained Decoding for LLMs, consider these references:

https://arxiv.org/pdf/2405.00218

https://arxiv.org/pdf/2305.13971