GitHub Copilot: A qualitative review

A qualitative review of the overall usefulness of GitHub Copilot (via GPT-4o and o1) as an assistant in your workspace.

Introduction

I've been working with OpenAI's chat-based models since November 2022, using a variety of them via chat and API across various implementations, and began using Copilot as my AI-based code assistant in early 2024.

GPT-4, GPT-4o, and o1 have excelled at code generation tasks while staying relevant to the context across subsequent requests (i.e., longer conversations with more initial context). Improved reasoning, combined with better in-context search, has led to satisfactory code generation over longer contexts (conversation, files, previous generations, etc.), which makes production use more viable when these models are integrated into IDEs as an assistant or tool. As a result, we've seen the emergence of numerous AI-based code editors, assistants, and autonomous code generation platforms.

If you're already working with GPT-4+ models for code generation, Copilot's features will feel very familiar. The three experiences you'll be using are Chat, the Snippet Editor, and Advanced Code Completion. Copilot Chat is, as expected, a chat integrated into your IDE, while the Snippet Editor appears when you select a block of code in a file. Code completion is akin to OpenAI's completion models: as you type, the model simply predicts the most likely next N tokens based on your last M tokens. We will primarily focus on Chat in this article.

Goals

Here, we analyze the overall usefulness of Copilot (via GPT-4o and o1) as an assistant in your workspace. For additional context, we will also analyze a simple request to add an auth interceptor to a microservice in Go.

- Evaluate reasoning over @workspace and attachments.

- Unpack the underlying implementation that powers Copilot.

- Determine its overall usefulness as an AI-based code assistant, and which features have been the most helpful (for me) in comparison to vanilla ChatGPT.

Copilot Chat

@workspace

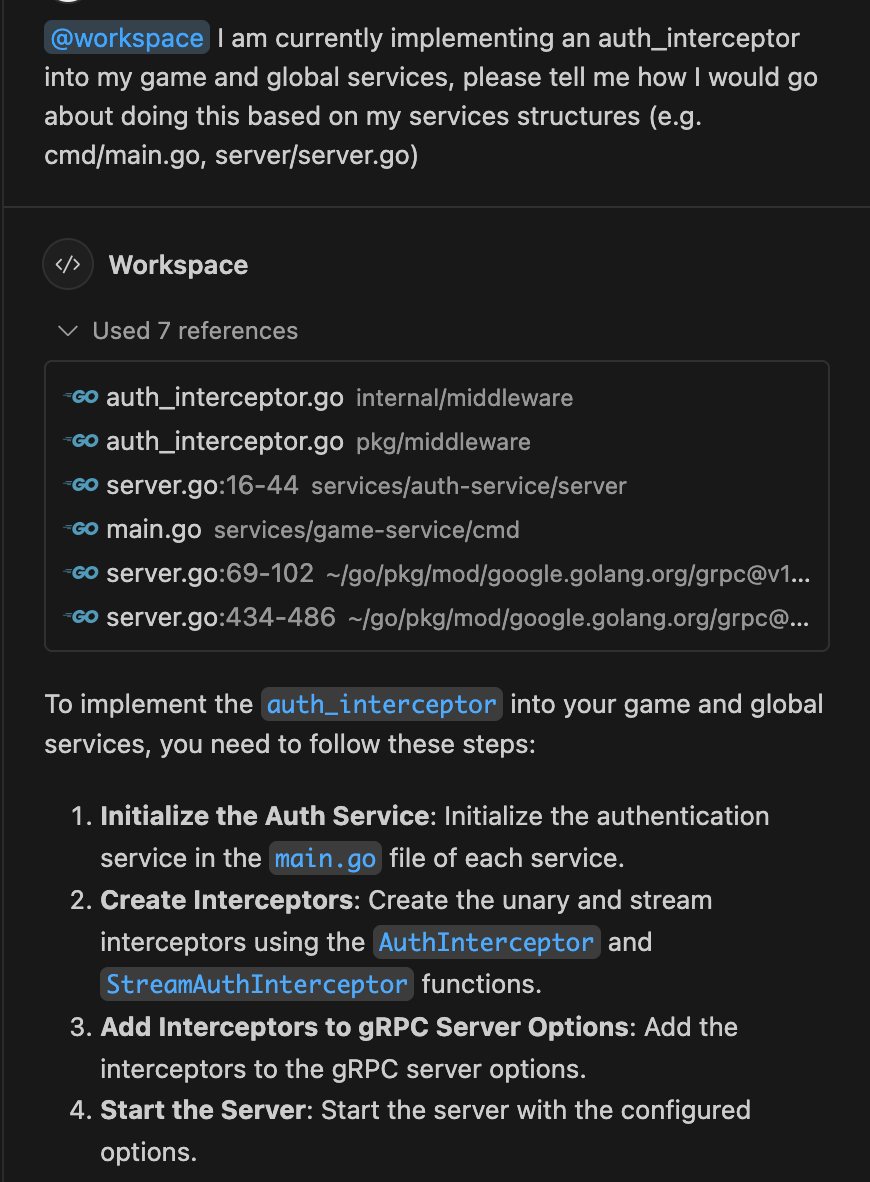

The @workspace callout is intended to analyze the entire workspace based on the prompt provided: it retrieves references from the workspace, injects them into the context, and reasons over that context to answer your question or execute your task.

The Process

Generally, retrieval at the @workspace level will start with the original references, and then expand to related references that may be needed to resolve the problem (either through a graph or native linked-reference features). This seems to be the first task of the planner: extracting the files needed to answer the question or resolve the problem.

In Copilot's response, after grabbing initial references, it noted examples with additional files in the workspace that were not originally added as a reference. This implies there are internal steps and tool calls to complete one chat interaction, or that additional workspace metadata remains in the context that can be referenced.

While I expected it to initially load references to the global-service/cmd/main.go and related server.go files, the resulting response did generate a hallucination-free, viable implementation for the global service based on my original prompt.

Problem(s)

In the case above there were two references to auth_interceptor.go, even though only one existed. This suggests there is an internal cache that sometimes does not refresh appropriately, yielding incorrect references. Because the internal context is not exposed, it is hard to see what influence this has on the response, but stale code in the context can be assumed to affect future code generations if the cache is not invalidated appropriately.
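
One way stale or duplicate references like this could be avoided is to key each cached entry on a hash of the file's content and to evict entries for files that no longer exist. Whether Copilot does anything similar is unknown; the `ContextCache` type and its methods below are hypothetical.

```go
package main

import (
	"crypto/sha256"
	"fmt"
)

// entry is one cached file: the content hash it was indexed with and the
// text that would be injected into the model context.
type entry struct {
	hash [sha256.Size]byte
	text string
}

// ContextCache maps workspace file paths to their last indexed content.
type ContextCache struct {
	entries map[string]entry
}

// NewContextCache returns an empty cache.
func NewContextCache() *ContextCache {
	return &ContextCache{entries: make(map[string]entry)}
}

// Refresh stores the current content for path and reports whether the
// cached copy was missing or stale (its hash did not match).
func (c *ContextCache) Refresh(path string, content []byte) (changed bool) {
	h := sha256.Sum256(content)
	if old, ok := c.entries[path]; ok && old.hash == h {
		return false
	}
	c.entries[path] = entry{hash: h, text: string(content)}
	return true
}

// Evict drops entries for files that are no longer in the workspace, so a
// deleted or renamed file cannot show up as a second reference.
func (c *ContextCache) Evict(existing map[string]bool) {
	for path := range c.entries {
		if !existing[path] {
			delete(c.entries, path)
		}
	}
}

// Len reports how many files are currently cached.
func (c *ContextCache) Len() int { return len(c.entries) }

func main() {
	cache := NewContextCache()
	fmt.Println(cache.Refresh("auth_interceptor.go", []byte("package auth // v1")))
	fmt.Println(cache.Refresh("auth_interceptor.go", []byte("package auth // v1")))
	fmt.Println(cache.Refresh("auth_interceptor.go", []byte("package auth // v2")))
}
```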

Summary

In summary, the @workspace integration with GPT-4o and o1 is dynamic and flexible, as you would expect with these models. Both Copilot and vanilla ChatGPT generate similar outputs when given the same files. While the initial conditions (i.e., the files required) can be provided by the user, Copilot does seem to modify what it retrieves internally if that is needed to complete its plan.

In the cases where prompts were too broad, file retrieval across the workspace led to poor reasoning because of the abundance of extraneous or irrelevant files added to the context. When the prompts were well-defined and called out specific files, the generated code was more successful and better matched the requirements.

Better cache-busting may also be needed for workspace changes made outside of Copilot (seemingly an edge case). Without @workspace or attachments to contextualize your chat, the benefits of using Copilot Chat over standard OpenAI chat models start to diminish.

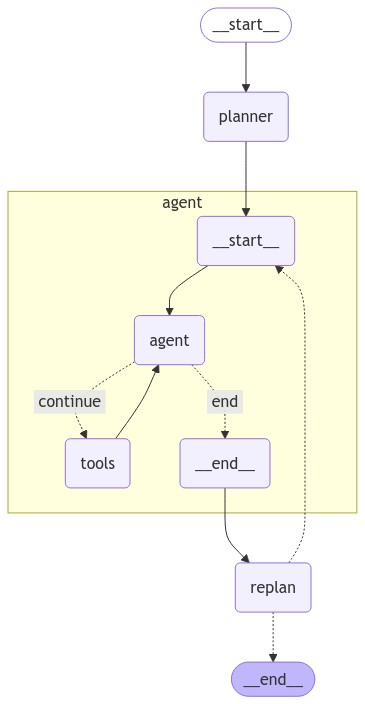

Implementation Unpack

The Copilot implementation can generally be modeled as a combination of a Plan-and-Execute agent and a ReAct agent (the execution agent), with a custom Retrieval Augmented Generation (RAG) implementation for better retrieval performance and optimized context management. This is my own model of how a Copilot-like implementation could work; it may or may not match the actual design.
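
To make the Plan-and-Execute idea concrete, here is a minimal sketch of a loop that runs a plan step by step and extends it when a step discovers files the plan did not cover. The `Step`, `Result`, `Executor`, and `Run` names are hypothetical, and the executor is a plain function standing in for what would be a model call with tools; nothing here is taken from Copilot's internals.

```go
package main

import (
	"fmt"
	"strings"
)

// Step is one unit of work in the plan (hypothetical shape).
type Step struct {
	Action string // for example "retrieve" or "edit"
	Target string // file the step applies to
}

// Result is what executing a step produced, including any files the
// executor discovered that the plan did not cover.
type Result struct {
	Output     string
	Discovered []string
}

// Executor runs a single step. In a real agent this would be a model call
// with tool access; here it is injected as a plain function.
type Executor func(Step) Result

// Run executes the plan in order and appends an "edit" step for every newly
// discovered file, a very small stand-in for a replan phase. maxSteps bounds
// the loop so a misbehaving executor cannot grow the plan forever.
func Run(plan []Step, exec Executor, maxSteps int) []string {
	planned := make(map[string]bool)
	for _, s := range plan {
		planned[s.Target] = true
	}
	var trace []string
	for i := 0; i < len(plan) && i < maxSteps; i++ {
		res := exec(plan[i])
		trace = append(trace, plan[i].Action+" "+plan[i].Target+": "+res.Output)
		for _, f := range res.Discovered {
			if !planned[f] {
				planned[f] = true
				plan = append(plan, Step{Action: "edit", Target: f})
			}
		}
	}
	return trace
}

func main() {
	// Editing the game service reveals that the global service needs the
	// same change, so the plan grows by one step.
	exec := func(s Step) Result {
		if strings.HasPrefix(s.Target, "game-service/") {
			return Result{Output: "ok", Discovered: []string{"global-service/server.go"}}
		}
		return Result{Output: "ok"}
	}
	plan := []Step{{Action: "edit", Target: "game-service/server.go"}}
	for _, line := range Run(plan, exec, 10) {
		fmt.Println(line)
	}
}
```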

From analyzing a chat answer from Copilot, it seemingly takes suggestions for files and matches documents contextually and by name, and also grabs related documents via either an internal graph or linked references (e.g., s.Serve(lis) is a reference to a third-party gRPC library, so the referenced chunk is retrieved). These references are injected into the context as additional relevant chunks, along with the original full document references. This ensures that if any custom libraries are used that are not in the model's internal knowledge, Copilot still knows about the methods used in the originally discovered documents.
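
Retrieving only the referenced chunk, rather than a whole dependency file, can be sketched with Go's standard `go/parser` and `go/ast` packages. The `ExtractFuncChunk` helper and the `rpc` sample source are hypothetical stand-ins; this illustrates the idea of chunk-level retrieval and makes no claim about how Copilot resolves references.

```go
package main

import (
	"fmt"
	"go/ast"
	"go/parser"
	"go/token"
)

// ExtractFuncChunk returns the source text of the named top-level function
// or method declared in src, or false if there is no such declaration.
func ExtractFuncChunk(src, name string) (string, bool) {
	fset := token.NewFileSet()
	file, err := parser.ParseFile(fset, "ref.go", src, parser.ParseComments)
	if err != nil {
		return "", false
	}
	for _, decl := range file.Decls {
		fn, ok := decl.(*ast.FuncDecl)
		if !ok || fn.Name.Name != name {
			continue
		}
		// Positions are converted to byte offsets into src.
		start := fset.Position(fn.Pos()).Offset
		end := fset.Position(fn.End()).Offset
		return src[start:end], true
	}
	return "", false
}

// librarySrc stands in for a dependency the model may not know about.
const librarySrc = `package rpc

type Server struct{}

// Serve accepts connections on lis.
func (s *Server) Serve(lis any) error { return nil }

func (s *Server) Stop() {}
`

func main() {
	// Only the Serve declaration would be injected into the context.
	if chunk, ok := ExtractFuncChunk(librarySrc, "Serve"); ok {
		fmt.Println(chunk)
	}
}
```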

In the earlier example, the original prompt required work on both global-service and game-service. The original RAG search returned only the game-service server.go as a reference, yet later in the same response Copilot correctly generated updates to both the game and global server.go files.

This implies either that the internal plan persists information beyond the original file references, or that a "replan" step occurred, per the agent model above, to identify that changes were required in the global server.go file as well.

Summary

Generally, Copilot augments the standard GPT-4o and o1 reasoning models and responses by using relevant files from your workspace to contextualize the answer. In addition to basic RAG, there are more advanced RAG components, such as retrieval of related chunks and additional retrieval during steps of the plan, that align its responses with your workspace.

Additional Capabilities

Having access to your workspace is an advantage that you can leverage greatly to accelerate your development through simple prompting. We'll walk through some examples below.

@github

Flagging @github in the chat gets answers grounded in web search, code search, your knowledge base, and your GitHub repository.

Explain, Fix, Tests

Copilot also comes with additional prompting flags such as /explain, /fix, and /tests, which inject additional context into the request. Calling these flags while using @workspace or attachments triggers the expected generations, such as explaining a file, attempting a fix for a particular code block, or generating a test for a particular file. These are simply structured prompt templates, and using them can save time.

YAML Files

Because your workspace is part of the context, you can now prompt for deployment templates with little effort. Copilot is good at producing GitHub Actions workflows, Dockerfiles, Docker Compose files, Envoy configuration, Kubernetes Deployments/Services, Terraform templates, and other configuration files (YAML and similar) that are generally well-documented. When generating these files, it's best to prompt for a specific version within the knowledge scope of the model; otherwise you may find that it hallucinates or mixes features from different versions of these tools.

Conclusion

As an Engineering Leader, I would recommend using GitHub Copilot due to its deep integration with developer IDEs, and I generally recommend augmenting your engineering team with AI-based IDEs and assistants for the productivity boost. Before implementing, make sure to check which tool aligns with the compliance, data privacy, and other regulatory requirements of your organization.

The future of IDEs

While this article covered the current state of Copilot Chat, there are other approaches to AI coding assistants that automate much more of the process, such as Cursor, Bolt.new, and GitHub's up-and-coming Copilot Workspace. These all approach codebase generation with an emphasis on automating project creation from zero (project initialization, code generation) to deployment (the move to infrastructure). We'll be deep-diving into Copilot Workspace upon its release.

Link to Copilot Workspace Waitlist