Critical Miss

We explore the creation of an LLM-based D&D Agent to enhance or replace a traditional Dungeon Master in the game loop.

Goal

- Build a fully playable multiplayer LLM-based D&D Agent

Challenge(s)

- Story continuity across players, NPCs, scenes, and the timeline (context management).

- Story uniqueness across various playthroughs (creative seeding via prompting).

- Story length vs. forgetfulness (memory management).

- Reasoning over long contexts (memory management).

- Context limitations (context optimization).

High-Level Implementation

- LLM-based D&D ReAct (Reasoning [via CoT] + Acting [action-plan generation]) Agent.

- The LLMs in use are gpt-4o (for the majority of game state management) and gpt-3.5 (summarization, extraction, observations).

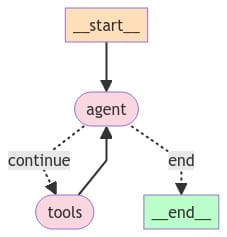

- Tool-calling via LangGraph's native tool-calling implementation.

- Python Flask-based API, with a LangGraph implementation of the ReAct Agent.

- ChromaDB for Vector Store

- MongoDB for Document store

- LangSmith for debugging and creating datasets for post-game analysis.

- Playground-style Interface built in React (the JS framework) to test various models, interactions, and memory management.

Agent

Base Architecture

We chose a ReAct Agent implementation to leverage its improved trustworthiness and interpretability of prompts over traditional approaches. We also leverage Function Calling so that the Actions the Agent takes can trigger system operations around game and player state management.

```python
from langchain_openai import ChatOpenAI
from langchain_core.tools import tool
from langgraph.prebuilt import create_react_agent


# sample tool
@tool
def create_npc(name: str) -> str:
    """
    Some method to create npc
    """
    return f"npc {name} created"


# sample react agent call
def route(prompt, messages, tools):
    model = ChatOpenAI(model="gpt-4o", temperature=0)
    inputs = {"messages": messages}

    # prebuilt react agent
    # (messages_modifier injects the system prompt; later LangGraph releases
    # renamed this parameter, so check the version you have installed)
    graph = create_react_agent(
        model, tools=tools, messages_modifier=prompt)

    # stream each intermediate state and print its newest message
    for s in graph.stream(inputs, stream_mode="values"):
        message = s["messages"][-1]
        if isinstance(message, tuple):
            print(message)
        else:
            message.pretty_print()


if __name__ == '__main__':
    prompt = "You are a Dungeon Master. This is fictional gameplay..."
    messages = [("user", "Hello, Dungeon Master! Start the game!")]
    tools = [create_npc]  # ... (further tools elided in the original)
    route(prompt, messages, tools)
```

Sample implementation of a basic ReAct agent using LangGraph

Memory

Overview

Building out the D&D Agent's memory requires a series of prerequisite steps that populate the Game State. Memory optimization is key to keeping the evolving context small while maintaining the reliability of contextual responses. It is therefore important to start with a broad set of memories and then narrow the context down using various techniques. To do this, we first explore all the metadata that can be generated during the game, and then analyze how to retrieve that information later from the various systems.

Game Metadata for Context

For in-game metadata, we rely primarily on a document store for populating the main game state and scene state objects. Below we dive deeper into the context objects that exist. The query approach for this type of metadata will be based on the message relations (e.g. player, campaign), while we try to retrieve observations and actions via other approaches.

Game State

- Last N messages between Player(s), Agent, NPC(s).

- What's the current Scene, for the current Player.

- What are the Available Scenes to travel to.

- What are the overall Game Objectives.

- What are the current Scene Objectives that lead to the Game Objective.
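The game state items listed above could be held in a small container like the one below. This is a minimal sketch rather than the project's actual schema: `Message`, `GameState`, every field name, and the `add_message` / `recent_messages` helpers are hypothetical names introduced for illustration, and the window size of 10 is an arbitrary default.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class Message:
    # Hypothetical message record; the real schema is not shown in the source.
    sender: str  # e.g. "player", "agent", or "npc"
    text: str


@dataclass
class GameState:
    # All field names are assumptions that mirror the list above.
    current_scene: str
    available_scenes: list[str]
    game_objectives: list[str]
    scene_objectives: list[str]
    max_messages: int = 10  # the "N" in "last N messages"
    messages: deque = field(default_factory=deque)

    def add_message(self, message: Message) -> None:
        """Append a message, dropping the oldest once the window is full."""
        self.messages.append(message)
        while len(self.messages) > self.max_messages:
            self.messages.popleft()

    def recent_messages(self) -> list[Message]:
        """Return the retained messages, oldest first."""
        return list(self.messages)
```

Capping the message window at write time keeps the state object bounded, so whatever is loaded from the document store is already close to what goes into the prompt.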

Scene Context

- Players in scene - Erwin the Warrior

- NPCs in scene - Elari the Bartender, Gerald the Bard

- Relevant Interactions & Observations - Erwin purchased a Beer from Elari

- Potential Conflicts (between players, NPCs) - Rowdy Orcs in the Bar

- Scene Objectives - Learn more information about the Mountain Caves

- Reflections, Interactions and Facts - Metadata generated over the course of the interaction or turn
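One way to turn the scene context above into text for the prompt is sketched below. `SceneContext`, its field names, and `render_scene_context` are hypothetical and not taken from the project; the example values are the ones from the list. Empty sections are skipped so they do not take up context.

```python
from dataclasses import dataclass, field


@dataclass
class SceneContext:
    # Hypothetical container; fields mirror the list above but are assumptions.
    players: list[str] = field(default_factory=list)
    npcs: list[str] = field(default_factory=list)
    interactions: list[str] = field(default_factory=list)
    conflicts: list[str] = field(default_factory=list)
    objectives: list[str] = field(default_factory=list)


def render_scene_context(scene: SceneContext) -> str:
    """Format the non-empty parts of a scene as plain text for the prompt."""
    sections = [
        ("Players in scene", scene.players),
        ("NPCs in scene", scene.npcs),
        ("Relevant interactions", scene.interactions),
        ("Potential conflicts", scene.conflicts),
        ("Scene objectives", scene.objectives),
    ]
    lines = [f"{label}: {', '.join(items)}" for label, items in sections if items]
    return "\n".join(lines)


scene = SceneContext(
    players=["Erwin the Warrior"],
    npcs=["Elari the Bartender", "Gerald the Bard"],
    interactions=["Erwin purchased a Beer from Elari"],
    conflicts=["Rowdy Orcs in the Bar"],
    objectives=["Learn more information about the Mountain Caves"],
)
print(render_scene_context(scene))
```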

Observations and Actions

For observations, we look at using an LLM to generate any observations after a turn has completed (over a moving window of activity in the game) that might be relevant to future game states. The goal of an observation is to capture insightful information about a scene that can be used as a memory.

```python
@tool
def create_observation(turn_id):
    """
    Create an observation of the turn.

    Returns:
    - str: A message indicating that the observation has been created.
    """
    # TODO: Logic to create an observation of the turn
    # TODO: Query the messages from the turn
    # TODO: Create an observation of all the messages
    # TODO: Store the observation in memory
    # DungeonMasterTools is defined elsewhere in the project (not shown)
    DungeonMasterTools._turn_id = turn_id
    print(f"Creating observation for turn {turn_id}...")
    return f"Observation created for turn {turn_id}."
```

We also allow the LLM to generate relevant observations using tools during the turn. We extract keywords and questions that could be associated with each observation, and embed those along with the observations themselves for later retrieval.

```python
from datetime import datetime


@tool
def create_memory(memory, keywords, importance):
    """
    Create a memory with the given content and keywords.

    Args:
    - memory (str): The content of the memory.
    - keywords (list): A list of keywords associated with the memory.
    - importance (int): The importance of the memory.

    Returns:
    - str: A message confirming the creation
    of the memory to the LLM.
    """
    # Logic to create a memory
    metadata = {
        "keywords": keywords,
        "importance": importance,
        "user_id": DungeonMasterTools._user['id'],
        "campaign_id": DungeonMasterTools._campaign['id'],
        "created_at": datetime.now().isoformat()
    }
    # DungeonMasterTools is defined elsewhere in the project (not shown)
    DungeonMasterTools._memory.store(memory, metadata)
    return (f"Memory '{memory}' created with importance {importance} "
            f"and keywords '{', '.join(keywords)}'.")
```

Observations and Actions

- Ask the LLM to generate questions that might retrieve relevant memories.

- Ask the LLM to generate similar memories (hallucinate) that it may have had and that are relevant to the context.

- Use a form of similarity retrieval (Cosine, Hybrid, BM25) to retrieve the initial batch of memories based on the approaches above.

- In addition, weighting retrieval by relevance, importance, and time decay has been suggested.

- Re-rank the set of memories using a reranking technique.

- Trim the re-ranked set and return the top-k memories (observations and actions).

- Inject re-ranked observations and actions back into the game context.

- Preliminary testing showed that observations or actions don't necessarily need to be from the same scene to be relevant.
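The weighting and top-k trimming steps in the list above can be sketched as follows. This is only an illustration under stated assumptions: `ScoredMemory`, `recency`, `combined_score`, and `top_k` are hypothetical names, the equal weights, the 1-10 importance scale, and the 24-hour half-life are arbitrary choices that do not come from the project, and `relevance` is assumed to be a similarity score in [0, 1] produced by an earlier retrieval step. It is not a model-based re-ranker, only a weighted sort.

```python
from dataclasses import dataclass


@dataclass
class ScoredMemory:
    text: str
    relevance: float  # similarity to the query in [0, 1], computed elsewhere
    importance: int   # assumed 1-10 scale
    age_hours: float  # time since the memory was created


def recency(age_hours: float, half_life_hours: float = 24.0) -> float:
    """Exponential decay: 1.0 for a new memory, 0.5 after one half-life."""
    return 0.5 ** (age_hours / half_life_hours)


def combined_score(memory: ScoredMemory,
                   w_relevance: float = 1.0,
                   w_importance: float = 1.0,
                   w_recency: float = 1.0) -> float:
    """Weighted sum of relevance, normalized importance, and recency."""
    return (w_relevance * memory.relevance
            + w_importance * (memory.importance / 10.0)
            + w_recency * recency(memory.age_hours))


def top_k(memories: list[ScoredMemory], k: int = 3) -> list[ScoredMemory]:
    """Sort by combined score, highest first, and keep the first k."""
    return sorted(memories, key=combined_score, reverse=True)[:k]
```

Because scene membership is not part of the score, a memory from a different scene can still rank highly when it is relevant and important, which matches the observation in the last bullet.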

References